Top AI experts say AI poses an extinction risk on par with nuclear war.

Prohibiting the development of superintelligence can prevent this risk.

What are experts warning about?

In 2023, hundreds of the foremost AI experts came together to warn that:

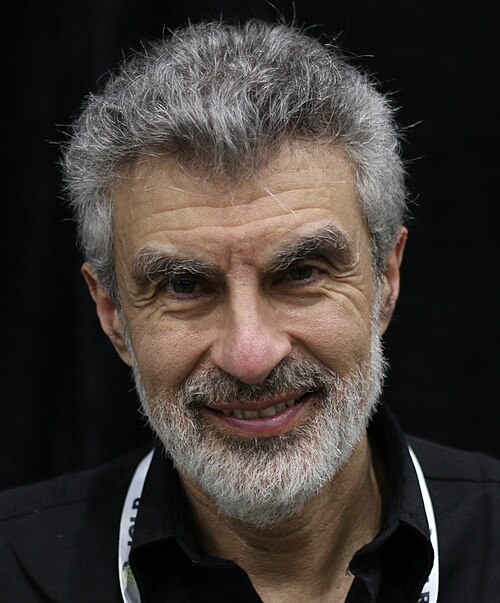

The signatories included the world's 3 most-cited AI researchers:

...and even the CEOs of the leading AI companies:

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

- Statement on AI Risk, CAIS

What can we do?

CAMPAIGN SUPPORT

Lord Browne of Ladyton

Former UK Secretary of State for Defence

"History shows that every breakthrough technology reshapes the balance of power. With Superintelligent AI, the risks of unsafe development, deployment, or misuse could be catastrophic—even existential—as digital intelligence surpasses human capabilities. Control must come first, or we risk creating tools that outpace our ability to govern them."

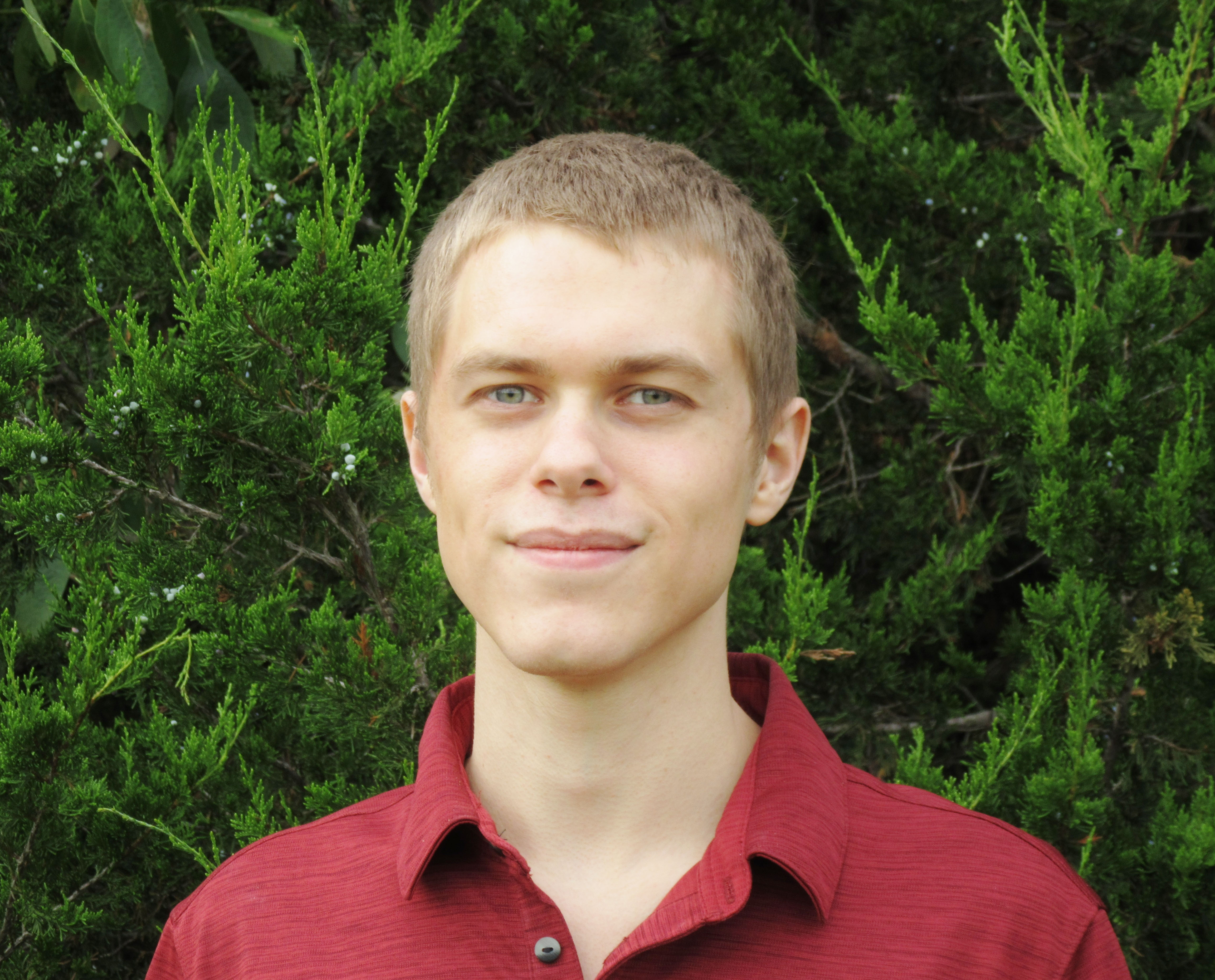

Steven Adler

Ex-OpenAI Dangerous Capability Evaluations Lead

"I worked on OpenAI's safety team for four years, and I can tell you with confidence: AI companies aren't taking your safety seriously enough, and they aren't on track to solve critical safety problems. This is despite an understanding from the leadership of OpenAI and other AI companies that superintelligence, the technology they're building, could literally cause the death of every human on Earth."

Roman Yampolskiy

Professor of Computer Science, University of Louisville

"My life's work has been devoted to Al safety and cybersecurity, and the core truths l've uncovered are as simple as they are terrifying: We cannot verify systems that evolve their own code. We cannot predict entities whose intelligence exceeds our own. And we cannot control what lies beyond our comprehension. Yet the global research community, driven by competition and unrestrained ambition, is rushing headlong to construct precisely such systems. Unless we impose hard limits, now, on the development of recursively self-improving Al, we are not merely risking catastrophic failure. We are engineering our own extinction."

Yuval Noah Harari

Historian, Philosopher, and Best-Selling Author of 'Sapiens'

"We will not be able to understand a superintelligent AI. It will make its own decisions, set its own goals, and to achieve them it might trick and deceive humans in ways we cannot hope to understand. We are the Titanic headed towards the iceberg. In fact, we are the Titanic busy creating the iceberg. Let's change course and build a future that keeps humans in control."

Anthony Aguirre

Executive Director of FLI, Professor at UC Santa Cruz

"At the Future of Life Institute, we've united leading scientists to address humanity's greatest threats. None is more urgent than uncontrolled AI. If we're going to build superintelligent AI, we'd need to ensure that it is safe, controllable, and actually desired by the public first."

Stuart Russell

Professor of Computer Science, UC Berkeley

"To proceed towards superintelligent AI without any concrete plan to control it is completely unacceptable. We need effective regulation that reduces the risk to an acceptable level. Developers cannot currently comply with any effective regulation because they do not understand what they are building."

Dan Hendrycks

Director of the Center for AI Safety, Advisor for xAI and Scale

"We seriously risk human extinction by building superintelligence. We don’t know of any way to prevent extinction on the current path to superintelligence."